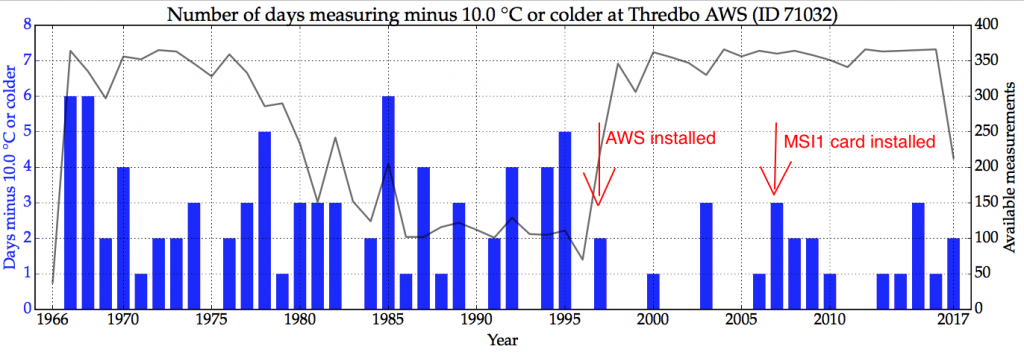

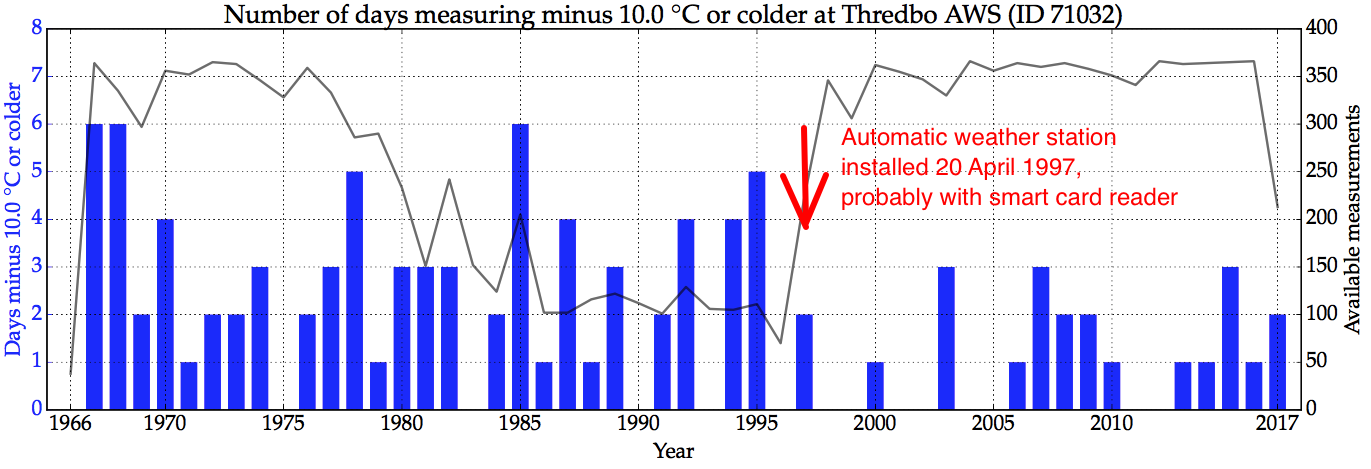

TWO decades ago the Australian Bureau of Meteorology replaced most of the manually-read mercury thermometers in its weather stations with electronic probes that could be read automatically – so since at least 1997 most of the temperature data has been collected by automatic weather stations (AWS).

Before this happened there was extensive testing of the probes – parallel studies at multiple sites to ensure that measurements from the new weather stations tallied with measurements from the old liquid-in-glass thermometers.

There was even a report issued by the World Meteorological Organisation (WMO) in 1997 entitled ‘Instruments and Observing Methods’ (Report No. 65) that explained that, because the modern electronic probes being installed across Australia reacted more quickly to second-by-second temperature changes, measurements from these devices need to be averaged over a one- to ten-minute period to provide some measure of comparability with the original thermometers.

This report has a 2014 edition, which the Bureau now claims to be operating under – these WMO guidelines can be downloaded here:

http://www.wmo.int/pages/prog/www/IMOP/CIMO-Guide.html .

Further, section 1.3.2.4 of Part 2 explains how the natural small-scale variability of the atmosphere and the short time-constant of the electronic probes make averaging most desirable… and goes on to suggest averaging over a period of 1 to 10 minutes.

I am labouring this point.

So, to ensure there is no discontinuity in measurements with the transition from thermometers to electronic probes in automatic weather stations, the maximum and minimum values need to be calculated from one-second readings that have been averaged over at least one minute.
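A minimal sketch of that calculation, assuming the one-second readings arrive as a plain list of temperatures in degrees Celsius. The function names and the sample values are hypothetical and for illustration only; this is not the Bureau's or the WMO's code.

```python
from statistics import mean


def minute_means(one_second_readings, window=60):
    """Average consecutive, non-overlapping blocks of `window` readings.

    Any trailing partial block is ignored (an assumption of this sketch).
    """
    full = len(one_second_readings) - len(one_second_readings) % window
    return [
        mean(one_second_readings[i:i + window])
        for i in range(0, full, window)
    ]


def extremes_of_minute_means(one_second_readings, window=60):
    """Return (maximum, minimum) taken over the one-minute averages."""
    means = minute_means(one_second_readings, window)
    if not means:
        raise ValueError("need at least one full averaging window")
    return max(means), min(means)


# Hypothetical data: two minutes of readings with a one-second spike to 23.0
readings = [20.0] * 59 + [23.0] + [21.0] * 60
averaged_max, averaged_min = extremes_of_minute_means(readings)
```

With averaging, the one-second spike to 23.0 is absorbed into its minute's mean rather than being reported as the maximum.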

Yet, in a report published just yesterday, the Bureau acknowledges what I have been explaining in blog posts for some weeks, and Ken Stewart since February: that the Bureau is not following these guidelines.

In the new report, the Bureau admits on page 22 that:

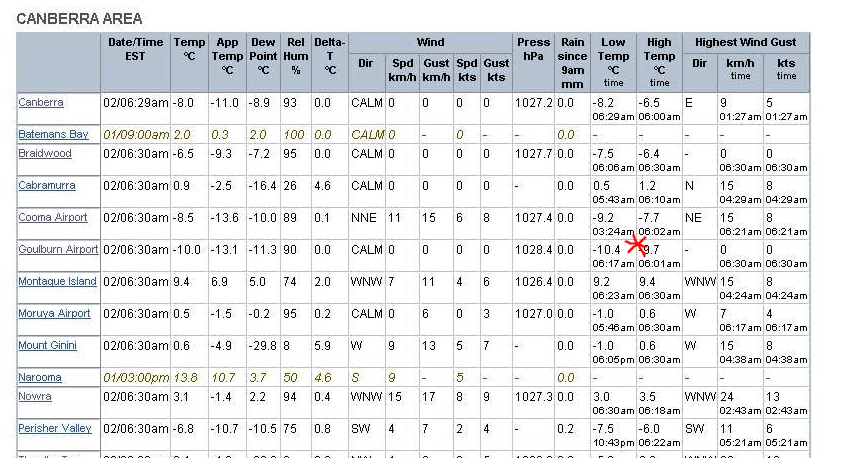

* the maximum temperature is recorded as the highest one-second temperature value in each minute interval,

* the minimum is the lowest one-second value in the minute interval, and

* it also records the last one-second temperature value in the minute interval.

No averaging here!

Rather than averaging temperatures over one or ten minutes in accordance with WMO guidelines, the Bureau is entering one-second extrema.

Recording one-second extrema (rather than averaging) will bias the minima downwards, and the maxima upwards. Except that the Bureau is placing limits on how cold an individual weather station can record a temperature, so most of the bias is going to be upwards.
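The arithmetic behind that first sentence can be sketched with synthetic data: the highest single reading can never be lower than the highest one-minute mean, and the lowest single reading can never be higher than the lowest one-minute mean. The signal below is an invented fluctuation around 25.0 degrees, not Bureau data, and the function name is hypothetical. The sketch shows only the direction of the difference, not its real-world size, and it does not model any limit on low temperatures.

```python
import math
from statistics import mean


def compare_extremes(one_second_readings, window=60):
    """Compare raw one-second extrema with extrema of one-minute means.

    Only full, non-overlapping windows are used (an assumption of this sketch).
    """
    blocks = [
        one_second_readings[i:i + window]
        for i in range(0, len(one_second_readings) - window + 1, window)
    ]
    if not blocks:
        raise ValueError("need at least one full averaging window")
    means = [mean(block) for block in blocks]
    used = [value for block in blocks for value in block]
    return {
        "one_second_max": max(used),
        "averaged_max": max(means),
        "one_second_min": min(used),
        "averaged_min": min(means),
    }


# Synthetic ten minutes: 25.0 degrees with a rapid +/-0.3 degree fluctuation
readings = [25.0 + 0.3 * math.sin(2 * math.pi * t / 7.0) for t in range(600)]
result = compare_extremes(readings)
```

In this example the one-second maximum sits close to the top of the fluctuation, while the averaged maximum stays near 25.0; the minima differ in the opposite direction.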

****

The Bureau’s new review can be downloaded here: http://www.bom.gov.au/inside/Review_of_Bureau_of_Meteorology_Automatic_Weather_Stations.pdf

I’ve also posted on this report, and limits on low temperatures, here: https://jennifermarohasy.com.dev.internet-thinking.com.au/2017/09/vindicated-bureau-acknowledges-limits-set-cold-temperatures-can-recorded/

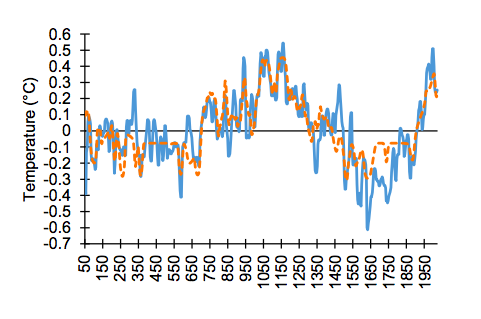

Jennifer Marohasy BSc PhD has worked in industry and government. She is currently researching a novel technique for long-range weather forecasting funded by the B. Macfie Family Foundation.
