IN the comments thread following my article published at On Line Opinion yesterday, someone asked: “Does Jennifer believe that NASA and the UN are faking temperature data?”

I replied: “I don’t believe that NASA and the IPCC are faking the data: I provide compelling evidence to show this. Indeed, they, and the Bureau of Meteorology are remodelling temperature series so that they fit the theory of anthropogenic global warming. In the case of both Amberley and Rutherglen cooling trends have been changed into warming trends without any reasonable justification.”

You can read the article here: http://onlineopinion.com.au/view.asp?article=18459

And I’m republishing it here:

CELEBRITY physicist Brian Cox misled the ABC TV Q&A audience on at least 3 points-of-fact on Monday night. This is typical of the direction that much of science is taking. Richard Horton, the current editor of the medical journal, The Lancet, recently stated that, “The case against science is straightforward: much of the scientific literature, perhaps half, may simply be untrue.”

Firstly, Cox displayed an out-of-date NASA chart of remodelled global temperatures as proof that we have catastrophic climate change caused by industrial pollution. Another panellist on the program, One Nation Senator Malcolm Roberts, tried to raise the issue of cause and effect: querying whether there really was a link between rising temperature and carbon dioxide. This is generally accepted without question. But interestingly – beyond experiments undertaken by a chemist over 100 years ago – there is no real proof, only unreliable computer simulation models.

Indeed, in 2006, John Nicol (a former Dean of Science at James Cook University) wrote to Penny Whetton (then meteorologist-in-charge of the climate science stream at CSIRO) asking if she could provide him with copies of notes, internal reports, references (“peer reviewed” of course) which would provide details of the physics behind the hypothesis of global warming. She wrote back immediately promising to find some – which he thought was odd since he had assumed her office was stacked-to-the-ceiling with such literature.

Whetton even went to the trouble of contacting other colleagues – one of whom sent Nicol an inconsequential article in a Polish journal. After eighteen months of their exchanging letters and all of her promises to be helpful, all she could finally offer was the “scientific” section of “Climate Change in Australia 2007”. There, to Nicol’s amazement, he found nothing apart from the oft-quoted: “We believe that most of the increase in global temperatures during the second half of the 20th century was very likely due to increases in the concentration of atmospheric carbon dioxide”.

“Believe”, “most”, and “very likely” are jargon, perhaps meaning “we don’t have a clue”.

The chart Cox held up on Monday night – now all over the internet as proof of global warming – essentially represents a remodelling of observed surface temperature measurements to confirm a belief that we most likely have catastrophic global warming.

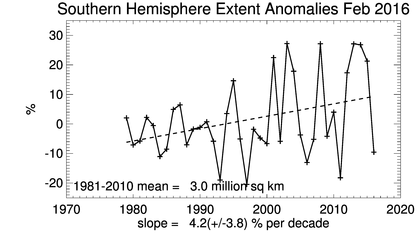

The accurate UAH satellite record shows a spike in temperatures in 1997-1998 associated with the El Nino back then, followed by a long pause of about 17 years, before the recent spike at the end of 2015 and beginning of 2016. The recent spike was also caused by an El Nino event. Global temperatures have been plummeting since March, and are now almost back to pause-levels. Indeed, Roberts was more correct than Cox, when he claimed there had been no warming for about 21 years – despite the rise in atmospheric levels of carbon dioxide.

The second misleading statement from Cox on Monday night concerned the nature of the modern sceptic – often harshly labelled a denier. Cox suggested that sceptics were the type of people that would even deny the moon-landing. In making this claim he was no doubt alluding to research, since discredited, funded by the Australian Research Council, that attempted to draw a link between scepticism of anthropogenic global warming and believing in conspiracies.

In fact, astronaut Harrison Schmitt – who actually stood on the moon, drilled holes, collected moon rocks, and has since returned to Earth – is a well-known sceptic of anthropogenic global warming. In short, astronaut Schmitt knows the moon-landing was real, but does not believe carbon dioxide plays a significant role in causing weather and climate change. In fact, Schmitt has expressed the view – a very similar view to Roberts – that the risks posed by climate change are overrated. Schmitt has even suggested that climate change is a tool for people who are trying to increase the size of government – though he does not deny that he has been to the moon and back.

Thirdly, Cox has qualifications in particle physics, yet on Monday night he incorrectly stated that Albert Einstein devised the four-dimensional space-time continuum. Those with a particular interest in the history of relativity theory know that while Einstein reproduced the Lorentz equations using a different philosophical interpretation, he was not the first to put these equations into the context of the four-dimensional continuum – that was done by Hermann Minkowski. Minkowski reformulated in four dimensions the then-recent theory of special relativity, concluding that time and space should be treated equally. This subsequently gave rise to the concept of events taking place in a unified four-dimensional space-time continuum.

Then again, Cox may not care too much for facts. He is not only a celebrity scientist, but also a rock star. Just the other day I was watching a YouTube video of him playing keyboard as the lead-singer of the band screamed, “We don’t need a reason”.

There was once a clear distinction between science – that was about reason and evidence – and art that could venture into the make-believe including through the re-interpretation of facts. This line is increasingly blurred in climate science where data is now routinely remodelled to make it more consistent with global warming theory.

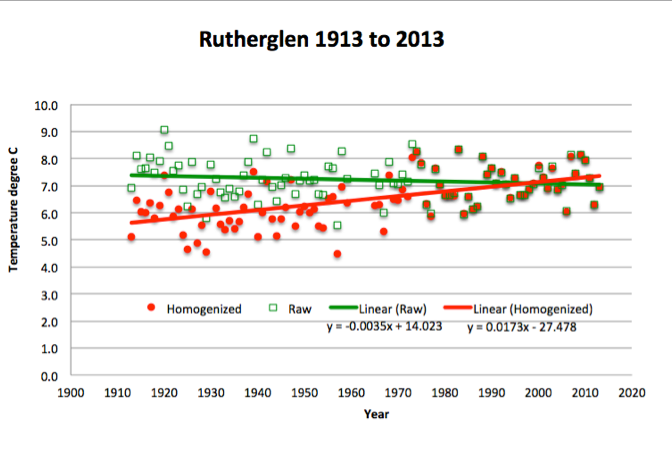

For example, I’m currently working on a 61-page exposé of the situation at Rutherglen. Since November 1912, air temperatures have been measured at an agricultural research station near Rutherglen in northern Victoria, Australia. The data is of high quality; therefore, there is no scientific reason to apply adjustments in order to calculate temperature trends and extremes. Mean annual temperatures oscillate between 15.8°C and 13.4°C. The hottest years are 1914 and 2007; there is no overall warming trend. The hottest summer was in 1938-1939 when Victoria experienced the Black Friday bushfire disaster. This 1938-39 summer was 3°C hotter than the average maximum summer temperature at Rutherglen for the entire period: December 1912 to February 2016. Minimum annual temperatures also show significant inter-annual variability.

In short, this temperature data – like most of the series from the 112 locations used to concoct the historical temperature record by the Australian Bureau of Meteorology – does not accord with global warming theory.

So, adjustments are made by the Australian Bureau of Meteorology to these individual series before they are incorporated into the Australian Climate Observations Reference Network – Surface Air Temperature (ACORN-SAT); and also the UK Met Office’s HadCRUT dataset, which informs IPCC deliberations.

The spike in maximum temperatures in 1938-1939 is erroneously identified as a statistical error, and all temperatures before 1938 are adjusted down by 0.62°C. The most significant change is to the temperature minima: all values before 1974 and before 1966 are adjusted down by 0.61°C and 0.72°C, respectively. For the year 1913, there is a 1.3°C difference between the annual raw minimum value as measured at Rutherglen and the remodelled value.
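The arithmetic behind that 1913 figure can be sketched as follows. This is only an illustration, assuming the two adjustments to the minima are cumulative, so that a year falling before both breakpoints receives both; the names `MINIMA_ADJUSTMENTS` and `total_adjustment` are my own and are not taken from the Bureau's software.

```python
# Step adjustments to the Rutherglen minima, as quoted in the text:
# (breakpoint year, amount in degrees C by which earlier values are lowered).
# Assumption: a year before both breakpoints receives both adjustments.
MINIMA_ADJUSTMENTS = [(1974, 0.61), (1966, 0.72)]


def total_adjustment(year, adjustments=MINIMA_ADJUSTMENTS):
    """Return the total downward adjustment (degrees C) applied to a year."""
    return sum(amount for break_year, amount in adjustments if year < break_year)


# 1913 lies before both breakpoints, 1970 before only one, 1980 before neither.
for year in (1913, 1970, 1980):
    print(year, round(total_adjustment(year), 2))
```

On that assumption, 1913 receives 0.61°C plus 0.72°C, or 1.33°C in total, which matches the difference of about 1.3°C between the raw and remodelled values.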

The net effect of the remodelling is to create statistically significant warming of 0.7°C in the ACORN-SAT mean temperature series for Rutherglen: in general agreement with anthropogenic global warming theory.

NASA applies a very similar technique to the thousands of stations used to reproduce the chart that Cox held up on Monday night during the Q&A program. I discussed these changes back in 2014 with Gavin Schmidt, who oversees the production of these charts at NASA. I was specifically complaining about how they remodel the data for Amberley, a military base near where I live in Queensland.

Back in 2014, the un-adjusted mean annual maximum temperatures for Amberley – since recordings were first made in 1941 – showed temperatures trending up from a low of about 25.5°C in 1950 to a peak of almost 28.5°C in 2002. The minimum temperatures – minima are a measure of lowest temperatures – for Amberley showed cooling from about 1970. Of course this does not accord with anthropogenic global warming theory. To quote Karl Braganza from the Bureau as published by that online rag The Conversation, “Patterns of temperature change that are uniquely associated with the enhanced greenhouse effect, and which have been observed in the real world include… Greater warming in winter compared with summer… Greater warming of night time temperatures than daytime temperatures”.

So, the Bureau has “corrected” this inconvenient truth at Amberley by jumping-up the minimum temperatures twice through the homogenisation process: once around 1980 and then around 1996 to achieve a combined temperature increase of over 1.5°C.

This is obviously a very large step-change, remembering that the entire temperature increase associated with global warming over the 20th century is generally considered to be in the order of 0.9°C.

According to various peer-reviewed papers, and technical reports, homogenisation as practised in climate science is a technique that enables non-climatic factors to be eliminated from temperature series – by making various adjustments.

It is often done when there is a site change (for example from a post office to an airport), or equipment change (from a Glaisher stand to a Stevenson screen). But at Amberley neither of these criteria can be applied. The temperatures have been recorded at the same well-maintained site within the perimeter of the air force base since 1941. Through the homogenisation process the Bureau has changed what was a cooling trend in the minimum temperature of 1.0°C per century into a warming trend of 2.5°C per century. This has not resulted in some small change to the temperatures as measured at Amberley, but rather a change in the temperature trend from one of cooling to dramatic warming; this is also what was done to the minimum temperature series for Rutherglen – and also without justification.
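How step adjustments can reverse a fitted trend can be sketched with a toy calculation. The series below is synthetic and noise-free, not the Amberley record: the 15.0°C starting value and the two 0.75°C step sizes are hypothetical, chosen only so that the steps sum to 1.5°C at breakpoints of about 1980 and 1996. The helper names are my own, and this is not the Bureau's or NASA's algorithm.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    den = sum((x - mean_x) ** 2 for x in xs)
    return num / den


def lower_before(years, temps, break_year, amount):
    """Lower every value recorded before break_year by amount (degrees C)."""
    return [t - amount if y < break_year else t for y, t in zip(years, temps)]


# Synthetic series (NOT measured data): steady cooling of 1.0 C per century.
years = list(range(1941, 2013))
raw = [15.0 - 0.01 * (y - 1941) for y in years]

# Hypothetical step sizes: earlier values lowered relative to later ones,
# which has the same effect on the trend as jumping up the later values.
adjusted = lower_before(years, raw, 1980, 0.75)
adjusted = lower_before(years, adjusted, 1996, 0.75)

raw_trend = ols_slope(years, raw) * 100      # degrees C per century
adj_trend = ols_slope(years, adjusted) * 100

print(f"raw trend:      {raw_trend:+.2f} C per century")
print(f"adjusted trend: {adj_trend:+.2f} C per century")
```

In this toy case the fitted trend changes sign, from cooling to warming, although no individual measurement changed by more than 1.5°C. The exact size of the adjusted trend depends on the hypothetical step sizes and breakpoints, so it should not be read as a reproduction of the Bureau's figures.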

NASA’s Goddard Institute for Space Studies (GISS) based in New York also applies a jump-up to the Amberley series in 1980, and makes other changes, so that the annual average temperature for Amberley increases from 1941 to 2012 by about 2°C.

The new Director of GISS, Gavin Schmidt, explained to me on Twitter back in 2014 that: “@jennmarohasy There is an inhomogenity detected (~1980) and based on continuity w/nearby stations it is corrected. #notrocketscience”.

When I sought clarification regarding what was meant by “nearby” stations I was provided with a link to a list of 310 localities used by climate scientists at Berkeley when homogenising the Amberley data.

The inclusion of Berkeley scientists was perhaps to make the point that all the key institutions working on temperature series (the Australian Bureau, NASA, and also scientists at Berkeley) appreciated the need to adjust-up the temperatures at Amberley. So, rock star scientists can claim an absolute consensus?

But these 310 “nearby” stations stretch to a radius of 974 kilometres and include Frederick Reef in the Coral Sea, Quilpie post office and even Bourke post office. Considering the un-adjusted data for the six nearest stations with long and continuous records (old Brisbane aero, Cape Moreton Lighthouse, Gayndah post office, Bundaberg post office, Miles post office and Yamba pilot station) the Bureau’s jump-up for Amberley creates an increase for the official temperature trend of 0.75°C per century.

Temperatures at old Brisbane aero (the closest of these stations) also show a long-term cooling trend. Indeed, perhaps the cooling at Amberley is real. Why not consider this, particularly in the absence of real physical evidence to the contrary? In the Twitter conversation with Schmidt I suggested it was nonsense to use temperature data from radically different climatic zones to homogenise Amberley, and repeated my original question asking why it was necessary to change the original temperature record in the first place. Schmidt replied, “@jennmarohasy Your question is ill-posed. No-one changed the trend directly. Instead procedures correct for a detected jump around ~1980.”

If Twitter had been around at the time George Orwell was writing the dystopian fiction Nineteen Eighty-Four, I wonder whether he might have borrowed some text from Schmidt’s tweets, particularly when words like “procedures correct” refer to mathematical algorithms reaching out to “nearby” locations that are across the Coral Sea and beyond the Great Dividing Range to change what was a mild cooling trend into dramatic warming, for an otherwise perfectly politically-incorrect temperature series.

Horton, the somewhat disillusioned editor of The Lancet, also stated recently that, “Afflicted by studies with small sample sizes, tiny effects, invalid exploratory analyses, and flagrant conflicts of interest, together with an obsession for pursuing fashionable trends of dubious importance, science has taken a turn towards darkness.” I would not go that far! I am not sure it has taken a turn towards darkness – perhaps just a turn towards the make-believe. Much of climate science, in particular, is now underpinned with a postmodernist epistemology – it is simply suspicious of reason and has an acute sensitivity to the role of ideology in asserting and maintaining particular power-structures including through the homogenisation of historical temperature data.

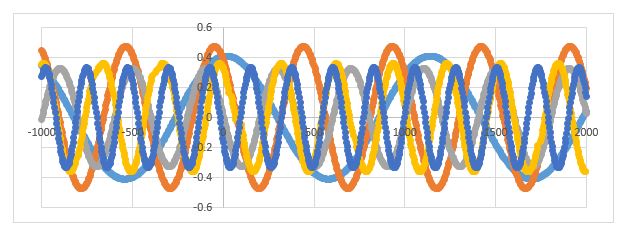

![The monthly thermometer record for Richmond, NE Australia, compared with the UAH satellite record for all of Australia. [Note, chart 1 was a plot of anomalies, this is a plot of actual temperatures.]](https://jennifermarohasy.com.dev.internet-thinking.com.au/wp-content/uploads/2016/04/UAH-Richmond-Monthly-toMarch2016.gif)

Jennifer Marohasy BSc PhD has worked in industry and government. She is currently researching a novel technique for long-range weather forecasting funded by the B. Macfie Family Foundation.
